> boring bots

[2025-10-16 11:20:00]

When you hear “AI Agent,” what comes to mind? Do you think of Hugo Weaving’s “Agent Smith” from The Matrix? Perhaps you think about the robots from an Asimov novel, or even something straight out of Terminator?

Well, thankfully, reality is far less dramatic.

Agentic AI is the new buzzword in the industry. Of the tech articles you read online these days, there is a good chance that at least half mention agentic AI at some point. And there is a good reason for it: this technology is evolving at an impressive rate, and it is hard to keep track of everything that is going on.

As a technologist and sci-fi enthusiast, I find this topic captivating, especially because I get to work with mainframes (the coolest machines anyone could ask for) as part of my daily job. So I can’t help wondering whether this new technology will find its way into the big iron.

For this reason, this will be yet another blog post about agentic AI, but bear with me for a second; I will make it worth your while. In the next few paragraphs, I will do my best to digest a lot of what has happened over the past few months and, most importantly, share my thoughts on whether we will see AI agents wandering into the EBCDIC jungles of the mainframe.

First things first: What is Agentic AI?

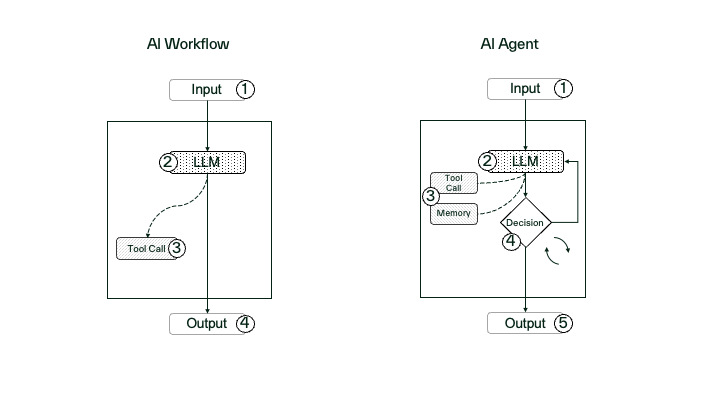

Let’s make sure that everybody is on the same page about what agentic AI is, what types of agentic AI systems exist, and what they are used for. Agentic AI refers to systems that go beyond static automation, enabling AI models (often Large Language Models) to dynamically orchestrate tools, retrieve context, and make decisions in real time. These systems are often divided into two main implementations: agents and workflows. An agentic workflow follows a predefined path: a fixed sequence of steps that call a language model and connected tools to achieve a specific goal. An AI agent, on the other hand, decides its next step as it goes: it can adapt to changing conditions, learn from feedback, and operate with a degree of autonomy that resembles human reasoning.
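To make the distinction concrete, here is a minimal Python sketch under toy assumptions. `run_workflow`, `run_agent`, the tools, and `fake_model` are all hypothetical names; `fake_model` is a rule-based stand-in for the language model a real agent would consult. The workflow always runs the same steps in the same order, while the agent loop asks the "model" what to do next and stops when it says it is done (with `max_steps` as a hard cap).

```python
from typing import Callable

# A "tool" is any function the system can call; these are hypothetical stand-ins.
Tool = Callable[[str], str]


def run_workflow(text: str, steps: list[Tool]) -> str:
    """Agentic workflow: the steps and their order are fixed in advance."""
    for step in steps:
        text = step(text)
    return text


def run_agent(request: str, tools: dict[str, Tool],
              choose_next: Callable[[str, list[str]], str],
              max_steps: int = 5) -> list[str]:
    """AI agent: at each step a model (stubbed as choose_next) picks the next tool."""
    history = [request]
    for _ in range(max_steps):  # hard cap so the loop always terminates
        action = choose_next(request, history)
        if action == "done":
            break
        history.append(tools[action](request))
    return history


# Workflow: tidy the text, then "publish" it (both steps are toy placeholders).
pipeline: list[Tool] = [str.strip, lambda t: f"[published] {t}"]
print(run_workflow("  New release notes  ", pipeline))
# prints: [published] New release notes

# Agent: a customer-service style toolbox (hypothetical tools).
tools: dict[str, Tool] = {
    "lookup_shipping": lambda req: "Order ships tomorrow",
    "escalate_to_human": lambda req: "Ticket handed to a human",
}


def fake_model(request: str, history: list[str]) -> str:
    # Stand-in for an LLM call: a real agent would ask the model to choose.
    if len(history) > 1:
        return "done"
    return "lookup_shipping" if "shipping" in request else "escalate_to_human"


print(run_agent("Where is my shipping update?", tools, fake_model))
# prints: ['Where is my shipping update?', 'Order ships tomorrow']
```

The workflow's behavior is visible just by reading the `steps` list; the agent's path only exists at run time, which is exactly why it is more flexible and harder to predict.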

Understanding the different implementations is critical. Although both methods are agentic, workflows are meant for scenarios where predictability and consistency are important (ideal for repeatable tasks), while agents excel in complex, variable environments where flexibility and contextual awareness are paramount. For example, we could set up a workflow to automate a content creation pipeline, defining a series of sequential tasks such as automated review of text, image processing, and posting on social media. On the other hand, we could set up an AI agent to act as a customer service assistant, capable of dynamically choosing the next action based on its interaction with the user, such as retrieving information about shipping or even escalating the issue to a human if necessary. The diagram below illustrates both cases:

Innovation is a flat circle

As you might have guessed, these concepts are not new at all. Chatbots have been around for many years, and the idea of autonomous systems that act on their environmental context is much older. Of the many reasons, technical and non-technical, why a technology takes off, one is its solution design. As previously mentioned, the “brain” of these systems is an AI model such as an LLM (Large Language Model). If you’d like to learn more about LLMs and how they work under the hood, there is an excellent series of videos by 3Blue1Brown on the topic. Although far from perfect, recent advances in generative AI are part of what is enabling this agentic AI revolution.

Regardless of the approach, one thing is clear to me: any successful implementation of these technologies, in any environment, will come down to the careful use of different architecture patterns. We need agents and workflows together. Real-world use cases carry enough nuance that a hybrid approach will often make the difference between success and failure.

But as always, the devil is in the details… I guess that’s why systems architecture tends to resemble witchcraft. (Believe me, I’ve seen architecture diagrams that were nothing short of arcane incantations.)

So, what about the mainframe?

Imagine agents capable of quickly identifying the root cause of issues and fixing them, or even better, analyzing system data and proactively preventing issues from happening in the first place. Agentic workflows could orchestrate and coordinate multiple upgrades simultaneously and automate the testing process. There’s no denying that the opportunity here is gigantic, but we must be cautious. We must do it right. After all, we are talking about the most reliable system on the planet.

As excited as I am about the potential of this new technology, I’m not about to set an army of AI agents loose on my mainframe systems overnight. Careful planning and a cohesive strategic direction are key here. This is where the responsible use of AI comes into play. Before we consider implementing these systems, we must have a clear understanding of our expectations and use cases, then set the appropriate guardrails to support our design. The right set of system prompts and policies will go a long way toward making agents and workflows behave as we expect. Since AI cannot be held accountable for its mistakes, it should never make an autonomous decision that could harm a system; a human should always be kept in the loop to approve or deny its actions.
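As an illustration of "policy first, human second", here is a minimal Python sketch, not a production control. Everything in it is hypothetical: the `ProposedAction` type, the `ALLOWED_VERBS` allow-list, the command strings, and the `run` stand-in, which only returns a string and never touches a real system. The agent can only *propose* an action; policy filters it, and a human reviewer (any callable returning `True`/`False`) makes the final call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    command: str  # what the agent wants to do (hypothetical command text)
    reason: str   # the agent's justification, shown to the human reviewer


# Hypothetical policy: only these action verbs may be proposed at all.
ALLOWED_VERBS = {"display", "restart"}


def policy_allows(action: ProposedAction) -> bool:
    """Guardrail 1: reject anything outside the allow-list before a human sees it."""
    parts = action.command.split()
    return bool(parts) and parts[0].lower() in ALLOWED_VERBS


def execute_with_approval(action: ProposedAction,
                          approve: Callable[[ProposedAction], bool],
                          run: Callable[[str], str]) -> str:
    """Guardrail 2: a human approves or denies every action that passes policy."""
    if not policy_allows(action):
        return f"blocked by policy: {action.command}"
    if not approve(action):
        return f"denied by reviewer: {action.command}"
    return run(action.command)


def run(command: str) -> str:
    # Stand-in executor: nothing real is executed in this sketch.
    return f"executed: {command}"


purge = ProposedAction("delete dataset PROD.PAYROLL", "free up space")
restart = ProposedAction("restart job PAYROLL1", "job abended")

print(execute_with_approval(purge, lambda a: True, run))     # blocked by policy: delete dataset PROD.PAYROLL
print(execute_with_approval(restart, lambda a: False, run))  # denied by reviewer: restart job PAYROLL1
print(execute_with_approval(restart, lambda a: True, run))   # executed: restart job PAYROLL1
```

In practice, the `approve` callable would be a ticket, a chat prompt, or a change-management step. The important design choice is that the default path is "no": if policy or the reviewer does not explicitly say yes, nothing runs.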

Remember, AI should be used as a force multiplier, never as a replacement for human judgment.

Because every real-life situation requires a slightly different approach and a slightly fine-tuned response, we need these systems to be flexible and adaptable, but we cannot, and should not, ever compromise on consistency.

Boring bots

In many situations in life, consistency equals boring. Imagine a restaurant where every dish tastes exactly the same. The first visit might be fine, the second predictable, but by the third, you’re begging for something new. In life, consistency can be dull. In software, it’s divine.

While consistency in life is not always a good thing, the world of software is the exact opposite. All we want from our software is consistency: we want it to do exactly what we expect. We want boring, and, unsurprisingly, this rule also applies to AI agents.

So yes, all my agents will be boring and predictable: “No spontaneity for you! That is reserved for humans only, thank you.”

Nevertheless, it is safe to say that this is yet another chapter in the revolution that generative AI is bringing to our society. Agentic AI is here to stay, and its potential is undeniable, but in the world of mainframes, potential means nothing without precision. The systems that run our most critical workloads demand excellence, consistency, and trust.

In the end, the most revolutionary thing we can build might just be something perfectly, beautifully boring.